-

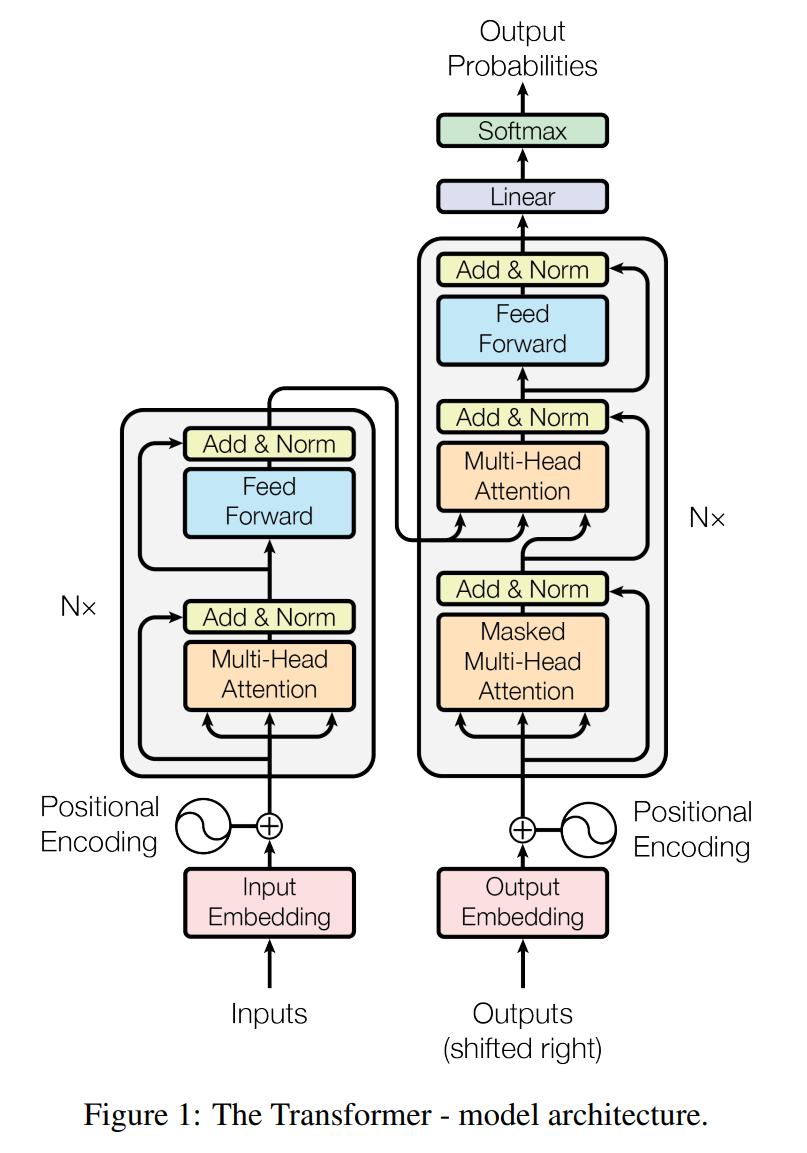

The use of self attention inside both encoder and decoder itself, not only encoder-decoder level “normal” attention

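- The clever positional encoding with sin and cos waves, and the use of residual connections to propagate that information to deeper layers. You can think of it as a continuous, float-valued analogue of binary encoding: each dimension oscillates at a different frequency, like bits that flip at different rates.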

Transformer Architecture: The Positional Encoding
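To make the binary-encoding analogy concrete, here is a minimal sketch of the sinusoidal encoding in plain Python. It assumes the usual formulation (sin on even dimensions, cos on odd ones, frequencies set by a base of 10000); the function name `positional_encoding` and the toy sizes are made up for illustration.

```python
import math


def positional_encoding(num_positions, d_model, base=10000.0):
    """Return a num_positions x d_model table of sinusoidal encodings."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even so sin/cos come in pairs")
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(0, d_model, 2):
            # Dimensions i and i+1 share one frequency; it drops as i grows.
            angle = pos / base ** (i / d_model)
            row.extend([math.sin(angle), math.cos(angle)])
        table.append(row)
    return table


# Toy sizes, chosen only so the table is easy to read.
pe = positional_encoding(num_positions=8, d_model=6)
for pos, row in enumerate(pe):
    print(pos, " ".join(f"{v:+.2f}" for v in row))
```

Printing the table shows the analogy: the leftmost columns change quickly from one position to the next, like low-order bits, while the rightmost columns barely move, like high-order bits.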

- Multi-head attention is kind of like “general” attention, where a learned linear layer is applied when combining key and query, but probably better, as each head now has its own query, key, and value projections.
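A rough sketch of multi-head self-attention in plain Python, to show where the linear layers sit. Within one head the score is `(x W_q) · (x W_k)`, which is the same bilinear form as “general” attention with `W = W_q W_k^T`. Everything here is illustrative: the helper names, the random weights, and the toy sizes are assumptions, and masking, biases, and batching are left out.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    scale = math.sqrt(len(q[0]))
    # One score per (query position, key position) pair.
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)


def multi_head_self_attention(x, heads, w_o):
    """x: seq_len x d_model; heads: list of (w_q, w_k, w_v), each d_model x d_head."""
    # Self-attention: queries, keys, and values are all projections of x.
    outputs = [attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
               for w_q, w_k, w_v in heads]
    # Concatenate the heads along the feature axis, then mix them with w_o.
    concat = [sum((out[t] for out in outputs), []) for t in range(len(x))]
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, num_heads = 4, 8, 2
d_head = d_model // num_heads
x = random_matrix(seq_len, d_model, rng)
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(num_heads)]
w_o = random_matrix(num_heads * d_head, d_model, rng)

out = multi_head_self_attention(x, heads, w_o)
print(len(out), len(out[0]))  # 4 8
```

Each head works in a smaller `d_head`-dimensional space, so several heads cost roughly the same as one full-width head while letting each one attend to different positions.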