Note: I have not read the original paper, only the blog post.

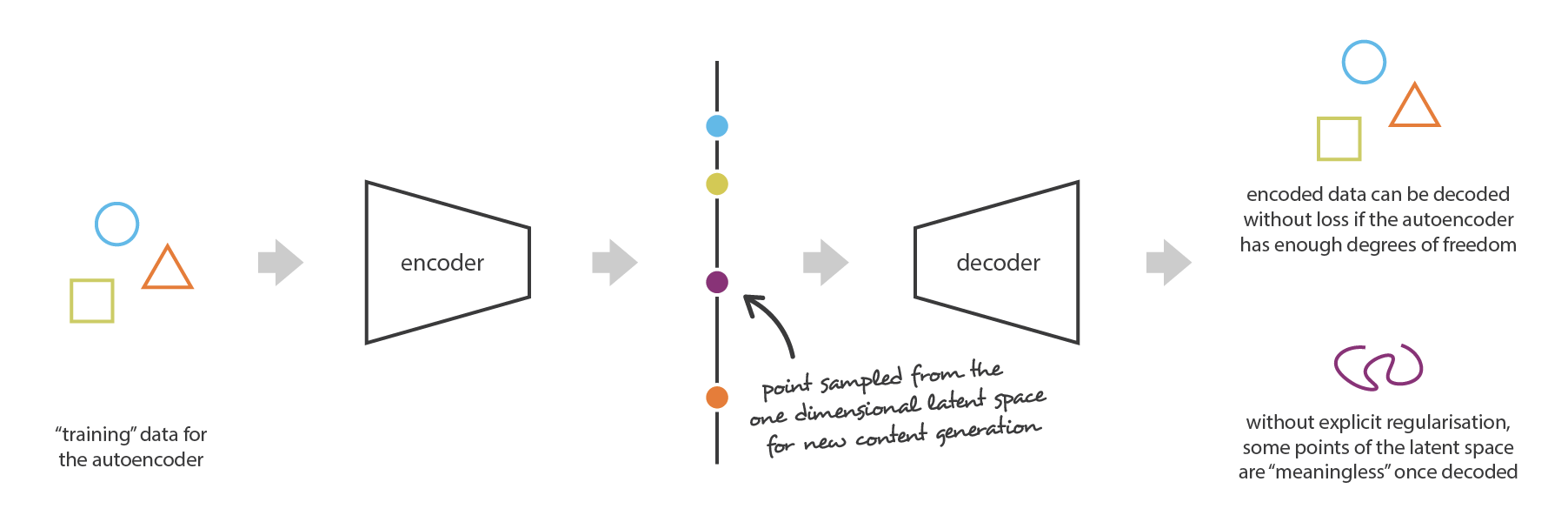

Autoencoder: easy, but may not produce what we want when used to generate new data.

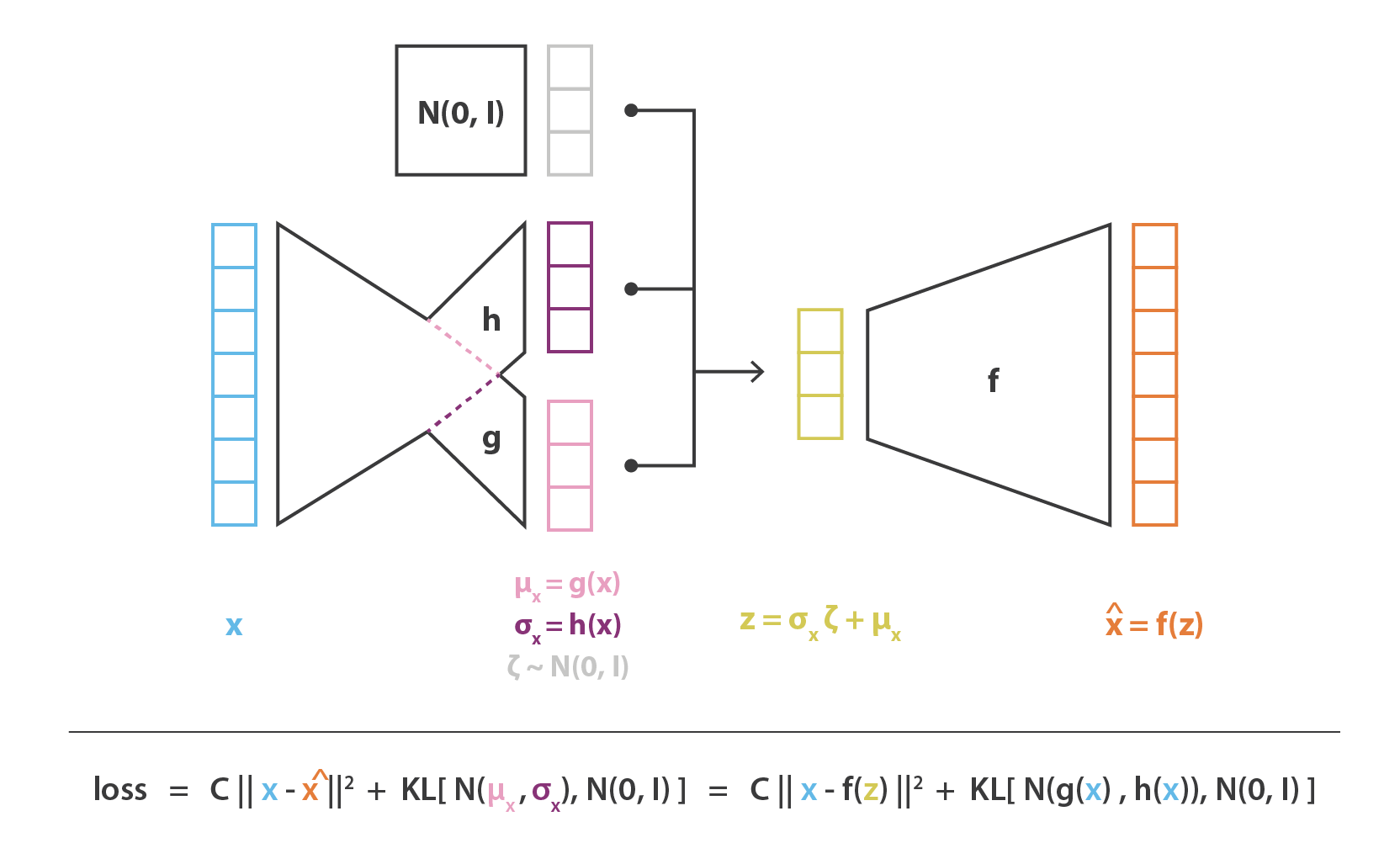

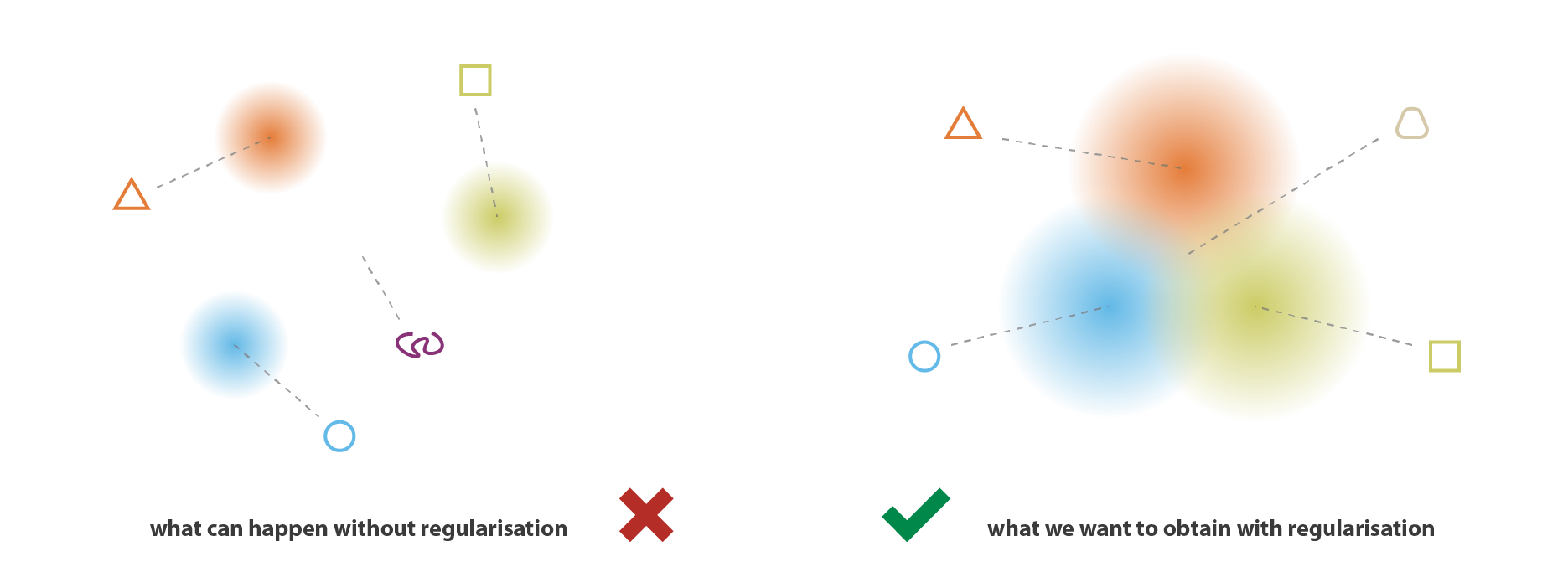

A variational autoencoder can be defined as an autoencoder whose training is regularised to avoid overfitting and to ensure that the latent space has good properties that enable a generative process.

We want the distribution produced by the encoder to be close to a standard normal distribution (input → encoded distribution → approximately standard normal).
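One common way to measure "close to a standard normal" is the KL divergence between the encoded Gaussian and N(0, I). The sketch below is my own illustration, not code from the blog post: it assumes the encoder outputs a mean vector and a log-variance vector with a diagonal covariance, and the names `kl_to_standard_normal`, `mu`, and `log_var` are made up for this example.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    mu and log_var are hypothetical encoder outputs for a single input,
    given as plain lists with one entry per latent dimension.
    """
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv  # per-dimension term: sigma^2 + mu^2 - 1 - log(sigma^2)
        for m, lv in zip(mu, log_var)
    )

# Already standard normal: no penalty.
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))   # 0.0
# Mean shifted away from zero: the penalty grows.
print(kl_to_standard_normal([1.0, -2.0], [0.0, 0.0]))  # 2.5
```

The term is zero only when every mean is 0 and every variance is 1, so adding it to the reconstruction loss pulls the encoded distributions toward the standard normal.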

Note that the mean $\mu$ and the standard deviation $\sigma$ are both multi-dimensional vectors, with one entry per latent dimension.
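To make the "multi-dimensional" point concrete, here is a minimal sketch that assumes the two quantities in the note above are the encoder's mean and log-variance vectors. It draws one latent sample using z = mean + std * noise; the function name `sample_latent` and the example values are hypothetical, chosen only for illustration.

```python
import math
import random

def sample_latent(mu, log_var, rng=random):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), independently per latent dimension."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)  # sigma = exp(log_var / 2)
        for m, lv in zip(mu, log_var)
    ]

# A 3-dimensional latent space: one mean and one log-variance per dimension.
mu = [0.5, -1.0, 2.0]
log_var = [0.0, -2.0, 1.0]
z = sample_latent(mu, log_var, random.Random(0))
print(len(z))  # 3
```

Each input is therefore encoded as a whole vector of means and a whole vector of spreads, not a single pair of scalars.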